In today’s workplace, AI tools are everywhere, from writing emails to screening job applicants. But while AI promises speed and efficiency, it also brings serious risks when used without proper guidance.

Just ask Salesforce, which recently found itself at the centre of a massive extortion attempt. Hackers claimed to have accessed data from over 700 major companies, including Google, Disney, and Toyota, by exploiting integrations with third-party AI tools. The attackers threatened to leak 1 billion records unless a ransom was paid, a stark reminder that even the most trusted platforms can become liabilities when AI is used without oversight. [Cybernews]

And it’s not just about data leaks. Workday, a widely used HR platform, is facing a class-action lawsuit over claims that its AI-powered hiring tools discriminated against applicants based on age, race, and disability. The case has sparked a broader conversation about how unchecked AI can quietly reinforce bias at scale — and how employers may be held accountable. [Forbes]

These incidents aren’t isolated. A recent report found that AI is now the #1 channel for corporate data exfiltration, with 40% of files uploaded to generative AI tools containing sensitive personal or financial information. Even more concerning? Most of this data is being shared through unmanaged personal accounts, completely outside the view of IT or compliance teams. [The Hacker News]

So, can you trust AI?

The answer is: sometimes, but only with the right knowledge and safeguards in place.

AI is a powerful tool, but it’s not infallible. It can hallucinate, mislead, or reflect the biases in the data it was trained on. And when employees use AI without understanding its limitations, the consequences can range from embarrassing errors to serious legal and reputational damage.

That’s why human oversight, ethical awareness, and data privacy aren’t optional; they’re essential.

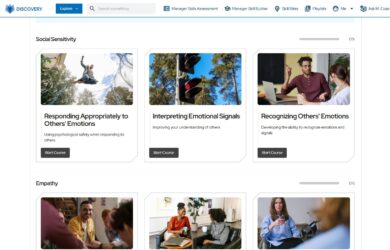

Upskilling starts here: AI & Cyber-Security Skills Accelerator Bundle

To recognise Cyber Security Month, we at Mindtools and Kineo have developed a training bundle to help organisations tackle today’s most urgent digital threats, from AI misuse to data protection. It includes a mix of new Kineo Shorts and Mindtools expert-led courses that teach you how to evaluate AI outputs critically and decide when to trust, or question, what AI tells you.

The bundle includes:

AI Privacy Essentials

• Understand the risks of oversharing with AI tools and build habits that protect personal and professional data.

Human Oversight in AI

• Discover why human judgment is still essential in AI decision-making and how to apply a simple oversight framework.

AI Basics: Know Its Strengths, Spot Its Limits

• A jargon-free introduction to what AI can (and can’t) do — perfect for building confidence and avoiding common pitfalls.

Mindtools: Cyber Security 101

• For anyone seeking a clear, straightforward introduction to preventing cyber threats before they happen.

Mastering AI for Managers – With Markus Bernhardt

• In this seven-part course, Dr Markus Bernhardt, a globally recognised AI strategist and tech visionary, shows how every manager can elevate the way they strategise, write, innovate, collaborate, and lead.

These courses are designed for busy professionals. They’re the perfect first step toward building a culture of responsible AI use in your organisation.

Don’t wait for a headline to make AI safety a priority.

Start upskilling your teams today with the Mindtools and Kineo AI & Cyber-Security Skills Accelerator Bundle. Build stronger managers and leaders: our experts are always happy to have a no-obligation conversation.